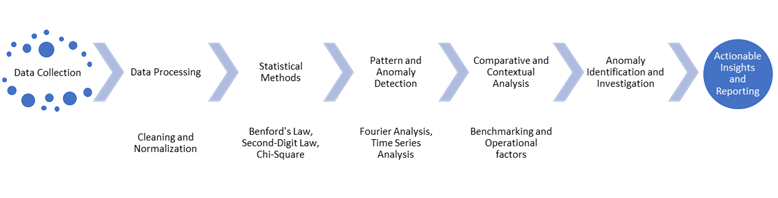

1. Benford's Law

Benford's Law states that in many naturally occurring datasets, the leading digit is more likely to be small than large. For example, 1 appears as the leading digit about 30% of the time, while 9 appears less than 5% of the time.

· Concept: Numbers starting with 1 appear more frequently than those starting with higher digits.
· Application: A powerful tool for fraud detection and data authenticity verification, especially in fields like accounting and auditing, because fabricated or manipulated data often deviates from this natural pattern.
· Benefit: Uncovers irregularities with little effort, strengthening the integrity of data analysis without extensive resources.
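The expected first-digit shares come from the formula P(d) = log10(1 + 1/d). The sketch below compares observed leading-digit frequencies with those expected values. The function names and the sample (powers of 2, which are known to follow the law closely) are illustrative assumptions, not part of any particular audit tool.

```python
import math
from collections import Counter

def benford_expected(digit: int) -> float:
    """Expected share of leading digit d under Benford's Law: log10(1 + 1/d)."""
    return math.log10(1 + 1 / digit)

def first_digit(value: float) -> int:
    """Leading non-zero digit of a non-zero number."""
    # Scientific notation puts the leading digit first, e.g. 0.042 -> '4.2...e-02'.
    return int(f"{abs(value):.14e}"[0])

def first_digit_frequencies(values):
    """Observed share of each leading digit 1-9, skipping zeros."""
    digits = [first_digit(v) for v in values if v != 0]
    counts = Counter(digits)
    return {d: counts.get(d, 0) / len(digits) for d in range(1, 10)}

# Hypothetical sample: the first 200 powers of 2.
sample = [2 ** n for n in range(1, 201)]
observed = first_digit_frequencies(sample)

for d in range(1, 10):
    print(d, f"observed={observed[d]:.3f}", f"expected={benford_expected(d):.3f}")
```

Large gaps between the two columns are a prompt for a closer look at the underlying records, not proof of manipulation on their own.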

2. Second-Digit Benford's Law

While Benford's Law focuses on the first digit, analyzing the distribution of the second digit can provide additional insights.

· Concept: The second digits in naturally occurring datasets also follow a predictable distribution, albeit less skewed than the first digits.
· Application: Comparing the observed second-digit frequencies against expected values can highlight anomalies not caught by first-digit analysis.
· Benefit: Enhances detection capabilities by adding an extra layer of scrutiny.
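The expected second-digit shares can be derived by summing the Benford probability of every two-digit prefix that ends in the digit of interest. The following is a minimal sketch under that definition. The helper names, the choice to skip values below 10, and the sample data are assumptions made for illustration.

```python
import math
from collections import Counter

def second_digit_expected(digit: int) -> float:
    """Expected share of `digit` (0-9) in the second position under Benford's Law."""
    return sum(math.log10(1 + 1 / (10 * first + digit)) for first in range(1, 10))

def second_digit(value: float) -> int:
    """Second significant digit, e.g. 4721 -> 7."""
    # In '4.72100000000000e+03', index 0 is the first digit and index 2 the second.
    return int(f"{abs(value):.14e}"[2])

def second_digit_frequencies(values):
    """Observed share of each second digit 0-9."""
    # Assumption: values below 10 are skipped, since whole-number amounts
    # in that range have no meaningful second digit.
    digits = [second_digit(v) for v in values if abs(v) >= 10]
    counts = Counter(digits)
    return {d: counts.get(d, 0) / len(digits) for d in range(10)}

# Hypothetical sample: 200 powers of 2, starting at 16.
sample = [2 ** n for n in range(4, 204)]
observed = second_digit_frequencies(sample)

for d in range(10):
    print(d, f"observed={observed[d]:.3f}", f"expected={second_digit_expected(d):.3f}")
```

The expected shares fall only gently from about 12% for 0 to about 8.5% for 9, which is why this test usually needs more records than the first-digit test to show a clear deviation.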

3. Last-Digit Analysis

Investigates the frequency and patterns of the last digits in your data.

· Concept: In genuine datasets, last digits often exhibit a uniform distribution. Fabricated data may show non-random patterns or repetitions.
· Use Case: Detecting manipulated figures in financial statements or expense reports where certain ending digits occur disproportionately.
· Advantage: Helps identify human bias in number generation, as people might subconsciously prefer certain numbers.
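A last-digit check can be sketched by counting final digits and comparing each share with the 10% expected under a uniform distribution. The amounts below are hypothetical expense claims stored as whole cents, and the 5-percentage-point tolerance is an arbitrary assumption that would need tuning for real data.

```python
from collections import Counter

def last_digit_shares(amounts_in_cents):
    """Share of each final digit 0-9 among integer amounts."""
    counts = Counter(abs(a) % 10 for a in amounts_in_cents)
    total = len(amounts_in_cents)
    return {d: counts.get(d, 0) / total for d in range(10)}

def overused_digits(shares, tolerance=0.05):
    """Digits whose share exceeds the uniform 10% by more than `tolerance`."""
    return [d for d, share in shares.items() if share - 0.10 > tolerance]

# Hypothetical expense claims in cents; many end in 0 or 5.
claims = [1250, 1375, 980, 2200, 455, 1310, 760, 1995, 845, 1500, 623, 1187]
shares = last_digit_shares(claims)
print(overused_digits(shares))
```

Some rounding is legitimate (list prices, per-diem rates), so a skew toward 0 or 5 is best read alongside knowledge of how the figures were produced.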

4. Number Duplication Analysis

Examines the repetition of entire numbers within a dataset.

· Mechanism: Excessive duplication of values can signal data copying or fabrication.
· Application: Useful in auditing where identical invoice amounts or transaction values may indicate fraudulent activities.
· Benefit: Flags suspicious patterns that deviate from expected variability.
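Counting how often each exact value occurs is enough for a first pass at duplication analysis. This sketch lists values that repeat at least a chosen number of times. The `min_count` threshold and the invoice amounts are hypothetical.

```python
from collections import Counter

def duplicated_values(values, min_count=3):
    """Values that occur at least `min_count` times, most frequent first."""
    counts = Counter(values)
    return [(value, count) for value, count in counts.most_common() if count >= min_count]

# Hypothetical invoice amounts.
invoices = [499.00, 120.50, 499.00, 87.20, 499.00, 120.50, 499.00, 310.00]
print(duplicated_values(invoices))
print(duplicated_values(invoices, min_count=2))
```

For real monetary data, storing amounts as integer cents or `Decimal` values avoids two equal amounts failing to match because of floating-point representation.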

5. Relative Size Factor (RSF)

Assesses how the largest number in a dataset, or in each subset of it, compares with the next largest.

· How It Works: Calculate the ratio of the largest individual transaction to the second largest, typically within each subset such as a vendor or account.
· Indicator: An unusually high RSF may suggest an anomalous transaction that warrants further investigation.
· Benefit: Simplifies the identification of outliers based on magnitude differences.
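The ratio described above can be sketched in a few lines. This version assumes the transactions arrive as (group, amount) pairs, with the group standing in for a vendor or account. The function name and the sample data are hypothetical.

```python
from collections import defaultdict

def relative_size_factors(transactions):
    """Map each group to (largest amount) / (second-largest amount)."""
    by_group = defaultdict(list)
    for group, amount in transactions:
        by_group[group].append(amount)

    factors = {}
    for group, amounts in by_group.items():
        top_two = sorted(amounts, reverse=True)[:2]
        # Groups with a single transaction, or a non-positive runner-up, are skipped.
        if len(top_two) == 2 and top_two[1] > 0:
            factors[group] = top_two[0] / top_two[1]
    return factors

# Hypothetical payments per vendor.
payments = [
    ("Vendor A", 100.0), ("Vendor A", 120.0), ("Vendor A", 110.0), ("Vendor A", 5000.0),
    ("Vendor B", 200.0), ("Vendor B", 210.0), ("Vendor B", 190.0),
    ("Vendor C", 75.0),
]
for vendor, rsf in relative_size_factors(payments).items():
    print(vendor, round(rsf, 2))
```

In this sample Vendor A stands out because one payment is many times the next largest, which is the kind of case (for example a misplaced decimal point) the RSF is meant to surface.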

6. Digital Analysis Using Chi-Square Test

Statistically tests the distribution of digits against expected frequencies.

· Methodology: Apply the chi-square goodness-of-fit test to evaluate if the observed digit distribution significantly deviates from the expected pattern.
· Application: Can be used on any digit position, not just the first or second.
· Benefit: Provides a quantitative measure to assess the likelihood of data manipulation.
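As a sketch of the goodness-of-fit test applied to first digits, the code below computes the chi-square statistic against Benford's expected counts and compares it with 15.507, the standard 5% critical value for 8 degrees of freedom (nine digit categories minus one). Both digit samples are constructed for illustration, and the function name is an assumption. A statistics library would normally supply the p-value directly.

```python
import math
from collections import Counter

# Chi-square critical value for 8 degrees of freedom at the 5% level.
CRITICAL_5PCT_DF8 = 15.507

def benford_chi_square(first_digits):
    """Chi-square statistic of observed first digits (1-9) against Benford's Law."""
    n = len(first_digits)
    counts = Counter(first_digits)
    statistic = 0.0
    for d in range(1, 10):
        expected = n * math.log10(1 + 1 / d)
        statistic += (counts.get(d, 0) - expected) ** 2 / expected
    return statistic

# Constructed sample whose digit counts are close to Benford's proportions.
benford_like = [d for d, c in zip(range(1, 10), [301, 176, 125, 97, 79, 67, 58, 51, 46])
                for _ in range(c)]
# Constructed sample where every digit is equally common.
uniform_like = [d for d in range(1, 10) for _ in range(100)]

for name, digits in [("benford_like", benford_like), ("uniform_like", uniform_like)]:
    stat = benford_chi_square(digits)
    verdict = "deviates" if stat > CRITICAL_5PCT_DF8 else "consistent"
    print(name, round(stat, 2), verdict)
```

The same function works for other digit positions if the expected proportions are swapped in and the degrees of freedom adjusted. With very large samples the test tends to flag even trivial deviations, so the size of the gap matters as much as the verdict.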

7. Fourier Analysis

Utilizes frequency domain analysis to detect periodicities and anomalies.

· Concept: Transforms data into frequencies to identify hidden patterns or irregularities.
· Application: Detects repetitive patterns that might indicate systematic fraud.
· Advantage: Effective for large datasets where time-domain analysis is challenging.
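To illustrate the idea of moving into the frequency domain, this sketch computes a plain discrete Fourier transform with the standard library and reports the strongest period in a series. It is quadratic in the series length, so it is only suitable for small inputs; an FFT routine from a numerical library would be the usual choice for large datasets. The daily series with a built-in 7-day cycle is hypothetical.

```python
import cmath
import math

def dft_magnitudes(series):
    """Magnitude of each frequency bin k = 1 .. n//2, after removing the mean."""
    n = len(series)
    mean = sum(series) / n
    centered = [x - mean for x in series]
    magnitudes = {}
    for k in range(1, n // 2 + 1):
        coefficient = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                          for t, x in enumerate(centered))
        magnitudes[k] = abs(coefficient)
    return magnitudes

def dominant_period(series):
    """Length (in samples) of the cycle with the largest magnitude."""
    magnitudes = dft_magnitudes(series)
    strongest_bin = max(magnitudes, key=magnitudes.get)
    return len(series) / strongest_bin

# Hypothetical daily totals over 8 weeks with a weekly cycle.
daily_totals = [100 + 20 * math.sin(2 * math.pi * t / 7) for t in range(56)]
print(dominant_period(daily_totals))
```

A strong, regular cycle is not suspicious in itself (weekly business rhythms are common). The signal of interest is a periodicity that has no operational explanation.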

8. Time Series Analysis

Analyzes data points collected or recorded at specific time intervals.

· Approach: Examines trends, seasonal patterns, and cyclical fluctuations to identify anomalies.
· Use Case: In ESG data, unexpected spikes or drops in resource consumption may indicate reporting errors or operational issues.
· Benefit: Incorporates temporal dynamics, enhancing detection of time-related anomalies.
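One simple way to flag spikes or drops is to compare each observation with the mean and standard deviation of the preceding window. The sketch below takes that approach. It ignores trend and seasonality, which a fuller analysis would model first, and the window size, threshold, and monthly consumption figures are all hypothetical.

```python
import statistics

def rolling_anomalies(series, window=6, threshold=3.0):
    """Indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` observations."""
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mean = statistics.fmean(history)
        spread = statistics.stdev(history)
        if spread > 0 and abs(series[i] - mean) / spread > threshold:
            flagged.append(i)
    return flagged

# Hypothetical monthly energy consumption (MWh) with one spike.
monthly_mwh = [100, 102, 98, 101, 99, 103, 100, 97, 180, 101, 99, 102]
print(rolling_anomalies(monthly_mwh))
```

Note that a flagged spike inflates the spread of the windows that follow it, which can hide later anomalies. Excluding flagged points from the history, or using a median-based spread, are common ways around this.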

9. Expectation-Maximization (EM) Clustering

An unsupervised learning technique to identify data clusters and outliers.

· Mechanism: Models the data as a mixture of distributions and estimates parameters to maximize the likelihood.
· Application: Outliers are data points that don't fit well into any of the identified clusters.
· Advantage: Effective in handling incomplete data and identifying subtle anomalies.
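The mechanism can be sketched with a deliberately small case: a one-dimensional mixture of two Gaussians fitted by alternating E and M steps, after which the point with the lowest mixture density is treated as the poorest fit. The fixed component count, the crude initialization, the iteration count, and the sample values are all assumptions made for this sketch. A production setting would more likely rely on a tested library implementation.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def fit_two_gaussians(data, iterations=50):
    """EM for a two-component 1-D Gaussian mixture.
    Returns (weights, means, variances)."""
    n = len(data)
    centre = sum(data) / n
    spread = sum((x - centre) ** 2 for x in data) / n
    weights = [0.5, 0.5]
    means = [min(data), max(data)]   # crude initialization at the extremes
    variances = [spread, spread]
    for _ in range(iterations):
        # E-step: responsibility of each component for each point.
        resp = []
        for x in data:
            p = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(p) or 1e-300   # guard against numerical underflow
            resp.append([pk / total for pk in p])
        # M-step: re-estimate weights, means, and variances from responsibilities.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / n
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var_k = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            variances[k] = max(var_k, 1e-6)   # floor keeps a component from collapsing
    return weights, means, variances

def mixture_density(x, weights, means, variances):
    return sum(w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances))

# Hypothetical readings: two tight clusters plus one value between them.
readings = [9.5, 10.0, 10.5, 9.8, 10.2, 10.1, 9.9, 10.3,
            49.5, 50.0, 50.5, 49.8, 50.2, 50.1, 49.9, 50.3,
            30.0]
weights, means, variances = fit_two_gaussians(readings)
least_likely = min(readings, key=lambda x: mixture_density(x, weights, means, variances))
print(least_likely)
```

Because the outlier is part of the data being fitted, it also stretches the estimated variances. Fitting on a trusted reference sample and scoring new points against it is one way to reduce that effect.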

10. Peer Group Analysis

Compares entities with similar characteristics to identify outliers.

· Method: Benchmark an entity's data against its peers in terms of size, industry, or geographical location.
· Application: In ESG reporting, comparing similar facilities can highlight discrepancies.
· Benefit: Contextualizes data, making anomalies more apparent.
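A peer comparison can be sketched by measuring each entity's distance from its own group's median, in units of the group's median absolute deviation (MAD). The choice of median and MAD, the threshold of 3, and the facility figures below are assumptions for illustration rather than a prescribed method.

```python
import statistics
from collections import defaultdict

def peer_outliers(records, threshold=3.0):
    """records: list of (entity, peer_group, value).
    Returns entities more than `threshold` MADs from their peer-group median."""
    groups = defaultdict(list)
    for _entity, group, value in records:
        groups[group].append(value)

    flagged = []
    for entity, group, value in records:
        peers = groups[group]
        median = statistics.median(peers)
        mad = statistics.median(abs(v - median) for v in peers)
        if mad > 0 and abs(value - median) / mad > threshold:
            flagged.append(entity)
    return flagged

# Hypothetical energy intensity (kWh per unit of output) by facility type.
facilities = [
    ("W1", "warehouse", 40), ("W2", "warehouse", 42), ("W3", "warehouse", 39),
    ("W4", "warehouse", 41), ("W5", "warehouse", 95),
    ("P1", "plant", 300), ("P2", "plant", 310), ("P3", "plant", 295), ("P4", "plant", 305),
]
print(peer_outliers(facilities))
```

The value 95 would look unremarkable in a pooled dataset that includes plants reporting around 300. It only stands out once it is compared with other warehouses, which is the point of grouping by peers.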

11. Regression Analysis

Examines the relationships between variables to detect inconsistencies.

· Concept: Builds models predicting expected values based on independent variables.
· Detection: Significant deviations from predicted values may indicate anomalies.
· Application: Useful when there's a strong theoretical basis for variable relationships.
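As a minimal sketch of residual-based detection, the code below fits a one-variable least-squares line and flags observations whose residual exceeds a multiple of the residual standard deviation. The single predictor, the threshold of 2, and the production and electricity figures are hypothetical.

```python
import math

def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

def residual_outliers(xs, ys, threshold=2.0):
    """Indices whose residual exceeds `threshold` residual standard deviations."""
    intercept, slope = fit_line(xs, ys)
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    # Two parameters were estimated, hence n - 2 degrees of freedom.
    residual_sd = math.sqrt(sum(r ** 2 for r in residuals) / (len(xs) - 2))
    return [i for i, r in enumerate(residuals) if abs(r) > threshold * residual_sd]

# Hypothetical data: units produced vs. electricity used (MWh).
units = [10, 20, 30, 40, 50, 60, 70, 80]
electricity = [25, 46, 64, 86, 150, 124, 146, 164]
print(residual_outliers(units, electricity))
```

An extreme point also pulls the fitted line toward itself and inflates the residual spread, so with small samples a modest threshold or a robust fitting method may be needed to catch it.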